Impact of the Inline topology on your NSX-T data flow

Now that you know the different supported topologies, it is worth looking at their exact impact on the data flow itself: how much traffic passes through the load balancer, and how does traffic pass through the different NSX-T Data Center components?

In case you have missed the first part:

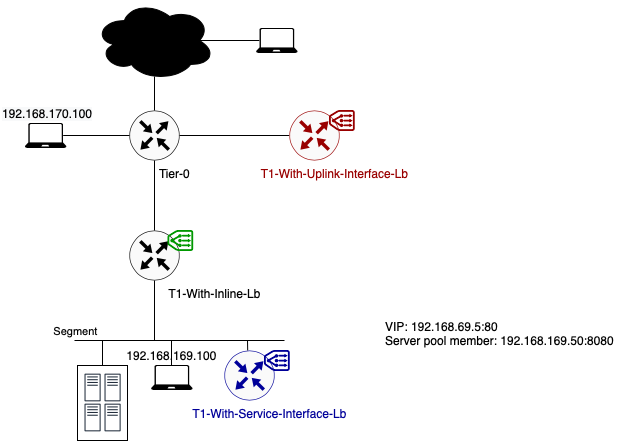

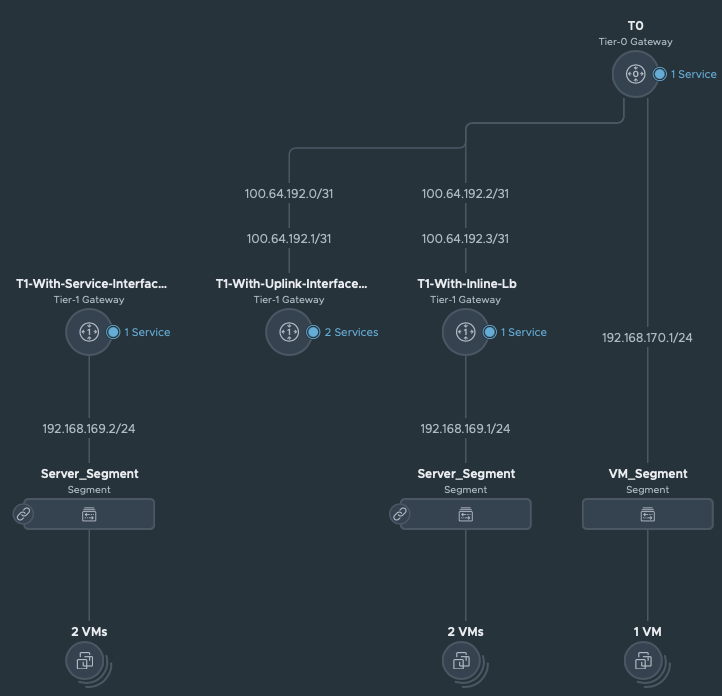

Lab Topology

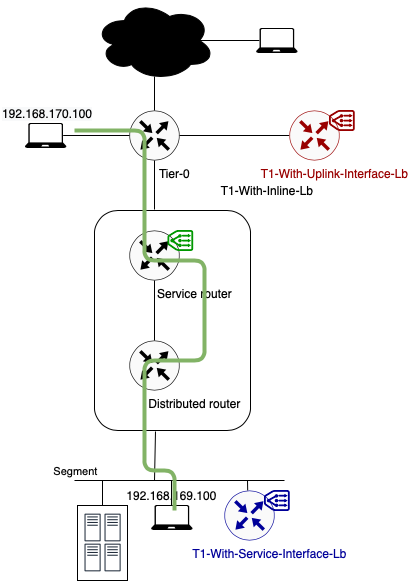

To explain the impact on the traffic flow, I will use the following basic setup. The different colours show the different topologies I will explain.

Note: Because of the limited lab size, the edge and compute nodes are running in a fully collapsed cluster. For more information, please reach out to me.

The server member behind the Virtual server IP is running a simple webserver on port 8080. The virtual server itself is listening on port 80.

Quick recap on the load balancer placement in NSX-T

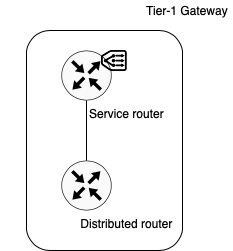

As I assumed at the beginning of this series, you should have a basic knowledge of NSX-T. However, here is a short recap of the placement of the load balancing service within NSX-T.

As load balancing is a centralised service, it runs on the SR component of a Tier-1 Gateway. The “physical” location of that SR component is an edge node, which means that the SR and DR components of the same gateway may be running on different hosts. This has an impact on the exact data path, so keep it in mind when reading the following chapters.

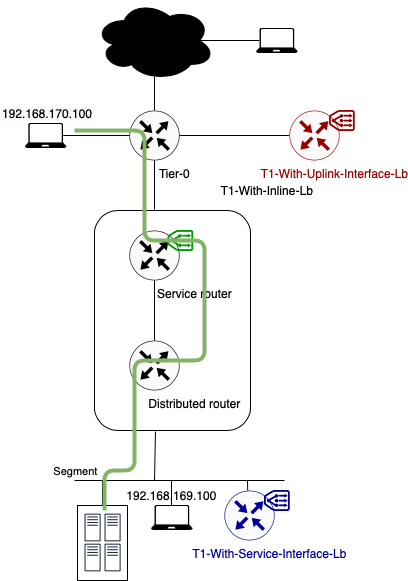

Inline Load Balancer

The data path when using an inline load balancer topology is quite straightforward: all traffic passing through the Tier-1 Gateway where the load balancer is deployed has to pass via the load balancer. Even traffic that doesn’t use the load balancing capabilities has to pass through it.

VIP Placement

When using an inline load balancer, the VIP can be placed in the following subnets:

- T1 Downlink Segment

- T0 Uplink subnet

- A new dedicated loopback subnet

Data path example 1: Traffic from 192.168.170.100 to VIP 192.168.69.5 (TCP:80)

In this example, client and server are not located on the same segment nor behind the same Tier-1 Gateway.

- As you can see in the above diagram traffic flows from the source to its default gateway. The default gateway for segment 192.168.170.0/24 is located on the directly connected Tier-0 Gateway.

- The Tier-0 Gateway checks its routing table and knows where to route the traffic. In this case the next hop is the Tier-1 Gateway called “T1-With-Inline-Lb”. Because this T1 has centralised services deployed, traffic passes through the T1 SR component first.

- The traffic is encapsulated and tunnelled from the source ESXi host to the Edge node where the T1 SR component is hosted. The T1 SR then forwards it to the load balancer.

- After the load balancer has done its magic (my way of saying that I will not go deeper into the load balancer process), the final destination of the traffic is known. In this case VIP 192.168.69.5:80 redirects the traffic to my only server pool, with webserver 192.168.169.50:8080. The traffic is sent back to the T1.

- The T1 SR checks its routing table and knows how to route traffic to this segment. In this example the webserver is located on a segment called Server_Segment. The next hop is the T1 DR component. This action is still performed on the edge node. The DR component of this T1 is distributed across all nodes that participate in the specific Overlay Transport Zone.

- Once the traffic arrives on the T1 DR component, the routing process is over. From here on traffic is handled as Layer 2 (switching) as the Server_Segment is directly connected to this distributed router.

- The Edge host switch first checks its ARP table to translate the VM IP into the VM MAC. It then checks the MAC address table, which maps the VM MAC to the ESXi host (TEP IP) where the final destination (the webserver VM) is located.

- Traffic is encapsulated and tunnelled from the Edge node to the destination ESXi host.

- The receiving host decapsulates the traffic and sends it to the webserver 192.168.169.50.
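The lookups in the steps above can be sketched in a few lines of Python. This is only a simplified model and not how NSX-T implements its data path. The /32 VIP route, the /24 prefix for Server_Segment, the MAC address and the TEP IP are assumptions I made up for the illustration; only the client segment, the VIP and the pool member come from the lab.

```python
import ipaddress

# Simplified Tier-0 routing table (prefix -> next hop). Only 192.168.170.0/24
# is stated in the post; the other prefixes are assumptions for this sketch.
T0_ROUTES = {
    "192.168.170.0/24": "connected",
    "192.168.69.5/32": "T1-With-Inline-Lb",   # VIP route (assumed /32)
    "192.168.169.0/24": "T1-With-Inline-Lb",  # Server_Segment (assumed /24)
}

# Virtual server -> pool member, as described in the post.
VIRTUAL_SERVERS = {("192.168.69.5", 80): ("192.168.169.50", 8080)}

# Hypothetical ARP and MAC tables on the Edge host switch.
ARP_TABLE = {"192.168.169.50": "00:50:56:00:00:50"}  # VM IP -> VM MAC
MAC_TABLE = {"00:50:56:00:00:50": "10.0.0.11"}       # VM MAC -> TEP IP


def next_hop(routes, dst_ip):
    """Longest-prefix match of dst_ip against a routing table."""
    dst = ipaddress.ip_address(dst_ip)
    matches = [ipaddress.ip_network(p) for p in routes
               if dst in ipaddress.ip_network(p)]
    if not matches:
        return None
    return routes[str(max(matches, key=lambda net: net.prefixlen))]


def load_balance(dst_ip, dst_port):
    """Return the pool member for a VIP, or the destination unchanged."""
    return VIRTUAL_SERVERS.get((dst_ip, dst_port), (dst_ip, dst_port))


def destination_tep(vm_ip):
    """ARP lookup (IP -> MAC) followed by a MAC table lookup (MAC -> TEP)."""
    return MAC_TABLE[ARP_TABLE[vm_ip]]


def trace(dst_ip, dst_port):
    """List the decisions taken for one packet towards dst_ip:dst_port."""
    steps = [f"T0 next hop: {next_hop(T0_ROUTES, dst_ip)}"]
    member_ip, member_port = load_balance(dst_ip, dst_port)
    steps.append(f"load balancer selects {member_ip}:{member_port}")
    steps.append(f"tunnel to TEP {destination_tep(member_ip)}")
    return steps


for step in trace("192.168.69.5", 80):
    print(step)
```

The point of the sketch is the order of the decisions: a routing lookup on the T0, the VIP-to-member translation on the load balancer, and finally the ARP and MAC lookups that decide to which host the traffic is tunnelled.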

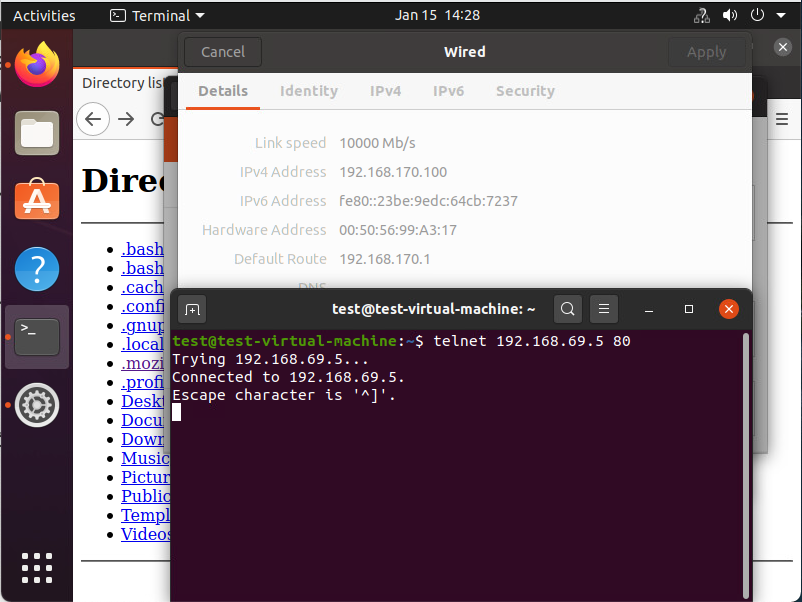

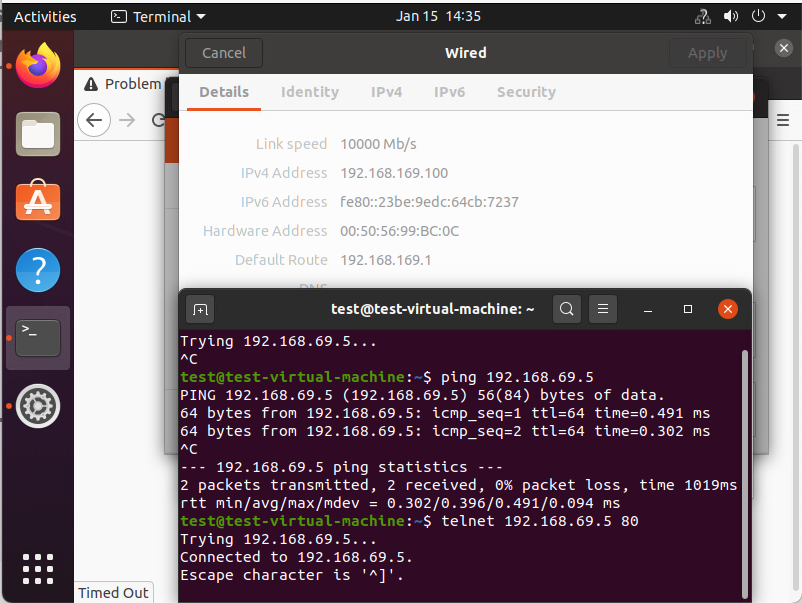

Below you can see that the traffic is reaching its final destination. In this screenshot I’m using telnet from the source VM to check whether port 80 on the VIP is open.
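If telnet is not available on the source VM, the same check can be done with a few lines of Python. This is a minimal sketch, assuming a Python interpreter is installed on the client; the function name `tcp_port_open` is my own and the default timeout is arbitrary.

```python
import socket


def tcp_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        # create_connection performs the full TCP three-way handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts and unreachable networks.
        return False
```

From the client in this lab, `tcp_port_open("192.168.69.5", 80)` should return `True` as long as the virtual server is reachable.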

Data path example 2: Traffic from 192.168.170.100 to 192.168.169.100 (ICMP)

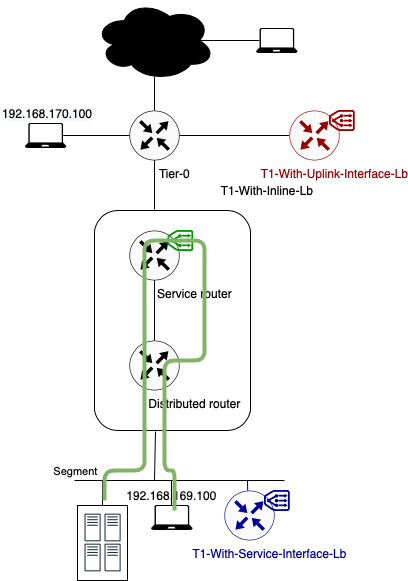

In this second example, I’m sending traffic that is not destined for a VIP on the load balancer. How does the traffic flow in this case? Let’s check it out.

As you can see, the traffic flows much as it did in the first example. The only difference is that here the traffic does not match any load balancer configuration. Although the traffic was never destined for the load balancer, it still passes via the T1 SR component (on the edge node). The consequence is that the traffic makes more hops than it would in an ideal situation (when all routers are distributed).

We can state that whenever a centralised load balancing service is deployed inline on a Tier-1, traffic needs to pass via the SR component (and load balancer) located on the Edge node.
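To make the extra hops visible, here is a small Python sketch that compares the logical path of example 2 with and without a centralised service on the Tier-1. It is a deliberately simplified model: the step names are my own wording, and it assumes that without an SR the T1 DR is reached on the source host itself.

```python
def data_path(t1_has_centralised_service):
    """Logical steps for traffic entering a Tier-1 from the Tier-0 side."""
    path = ["source ESXi host: T0 DR"]
    if t1_has_centralised_service:
        # The T1 SR lives on the Edge node, so the traffic is tunnelled there.
        path += [
            "tunnel to Edge node",
            "Edge node: T1 SR (inline load balancer)",
            "Edge node: T1 DR",
        ]
    else:
        # Fully distributed: routing completes on the source host.
        path += ["source ESXi host: T1 DR"]
    path += ["tunnel to destination ESXi host", "destination VM"]
    return path


def tunnel_count(path):
    """Count how many times the traffic is encapsulated and tunnelled."""
    return sum(step.startswith("tunnel") for step in path)


for has_service in (True, False):
    path = data_path(has_service)
    print(has_service, tunnel_count(path), " -> ".join(path))
```

In this model the inline load balancer costs one extra tunnel towards the Edge node, even for traffic that never matches a virtual server.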

Data path example 3: Traffic from 192.168.169.100 to VIP 192.168.69.5 (TCP:80)

- Source traffic is sent to its default gateway on the directly connected T1 Gateway.

- This T1 knows the VIP destination because it’s configured on the same T1. As load balancing is a centralised service, the traffic needs to be encapsulated and tunnelled towards the edge node.

- Again the load balancer performs its magic.

- The next steps are similar to the ones in the first example. The T1 handles the routing towards the load balanced server on the Server_Segment.

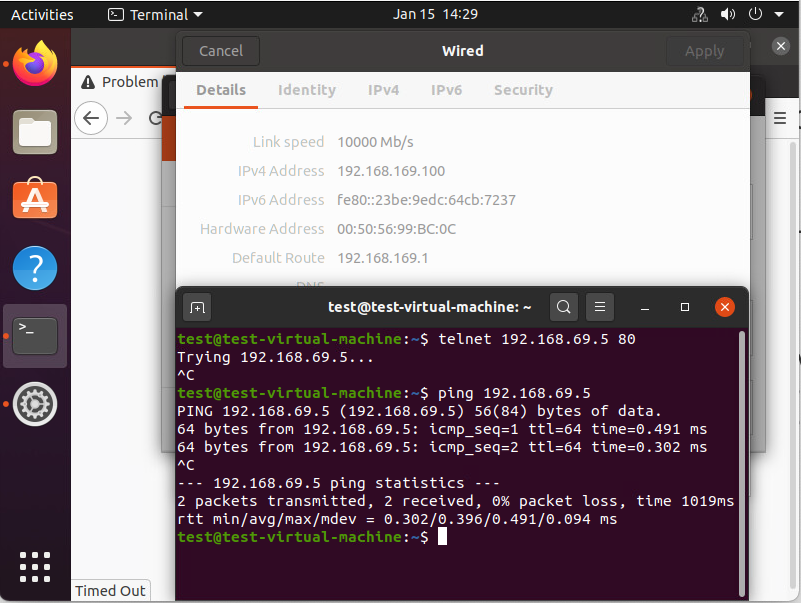

Although the path looks very similar to the previous examples, I’m not able to reach the webserver over VIP:80. In the screenshot below you can see that the traffic is not reaching its final destination: a telnet to the VIP on port 80 fails, while a simple ping to the VIP works fine. What is wrong?

In part 1 I mentioned that SNAT is not a requirement for inline load balancing. But this is only true under one specific condition: the client and server must not be connected to the same Tier-1 Gateway. When SNAT is not enabled, the server sees an incoming packet coming directly from the client (in this example: 192.168.169.100). When the server sends its reply, it can send it directly to this client IP.

So when client and server are connected to the same segment or Tier-1 Gateway, the return traffic can be routed directly, without passing via the T1 SR component that hosts the load balancer. As a result, the return traffic bypasses the load balancer. The client sent its request to the VIP but receives the reply from the server’s own IP, which does not match the connection it opened. Because of this asymmetric routing behaviour, the initial TCP connection fails and traffic is dropped.

To solve this, just enable SNAT on the load balancer. This forces the return traffic to go via the load balancer. The screenshot below shows that the telnet connection on port 80 now works: the load balanced webserver is accessible from the client.
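The effect of SNAT on the return path can be illustrated with a small Python model. This is a sketch of the reasoning only, not of the real load balancer. The client source port, the SNAT IP and the SNAT port are hypothetical values; the VIP, the server and the client IP are the ones from this lab.

```python
VIP = ("192.168.69.5", 80)
SERVER = ("192.168.169.50", 8080)
CLIENT = ("192.168.169.100", 40000)    # source port is hypothetical
LB_SNAT_SOURCE = ("10.0.0.1", 50000)   # hypothetical SNAT IP and port


def reply_source_seen_by_client(snat_enabled, same_t1=True):
    """Return the (IP, port) the client sees as source of the reply."""
    # Forward direction: the load balancer rewrites the destination from the
    # VIP to the server. With SNAT it also rewrites the source to its own IP.
    source_seen_by_server = LB_SNAT_SOURCE if snat_enabled else CLIENT

    # Return direction: the server replies to whatever source it saw.
    reply_goes_to_lb = source_seen_by_server == LB_SNAT_SOURCE
    # Without SNAT the reply only crosses the T1 SR (and the load balancer)
    # when the client is not behind the same Tier-1 Gateway.
    returns_via_lb = reply_goes_to_lb or not same_t1

    # Only the load balancer can translate the server address back to the VIP.
    return VIP if returns_via_lb else SERVER


def connection_established(snat_enabled, same_t1=True):
    """The client only accepts a reply that comes from the VIP it contacted."""
    return reply_source_seen_by_client(snat_enabled, same_t1) == VIP


print("same T1, no SNAT:     ", connection_established(False, same_t1=True))
print("same T1, SNAT:        ", connection_established(True, same_t1=True))
print("different T1, no SNAT:", connection_established(False, same_t1=False))
```

Only the first case fails in this model, which matches the behaviour of example 3 before SNAT was enabled.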

Up Next: The Impact Of The Load Balancing Service on your NSX-T Data Center Data Flow Part 3: One-Arm Topology

In the first post of this series I explained the two supported load balancing topologies. In Part 2 I explained the impact of the inline topology on the data flow. In Part 3 I will continue with the impact of the one-arm load balancer topology on the data flow.